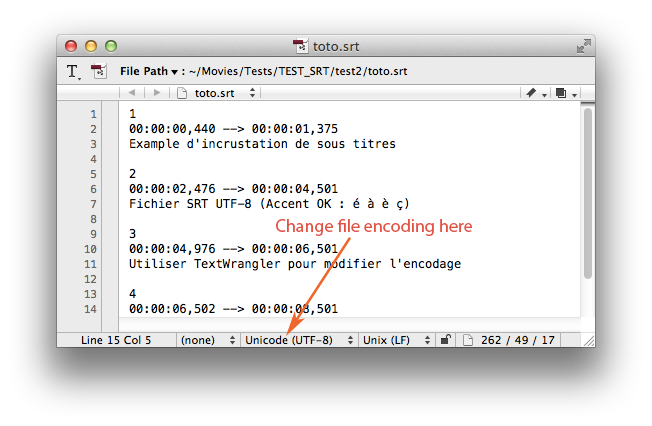

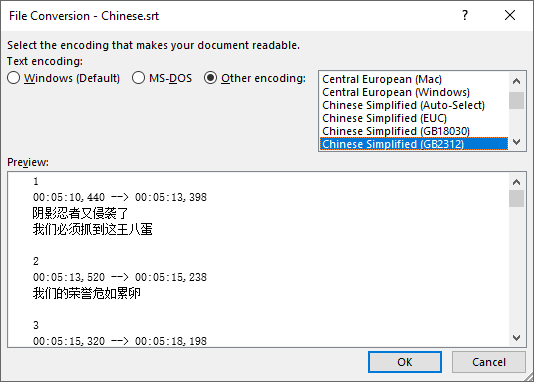

NLP models often handle different languages with different character sets. Unicode is a standard encoding system that is used to represent characters from almost all languages. Every Unicode character is encoded using a unique integer code point between `0` and `0x10FFFF`. A Unicode string is a sequence of zero or more code points.

This tutorial shows how to represent Unicode strings in TensorFlow and manipulate them using Unicode equivalents of standard string ops. It also separates Unicode strings into tokens based on script detection.

The basic TensorFlow `tf.string` dtype allows you to build tensors of byte strings. Unicode strings are UTF-8 encoded by default, so `tf.constant(u"Thanks 😊")` produces a scalar `tf.string` tensor holding the UTF-8 bytes of that text. A `tf.string` tensor treats byte strings as atomic units, which enables it to store byte strings of varying lengths: the string length is not included in the tensor dimensions.

If you use Python 3 to construct strings, note that string literals are Unicode by default.

There are two standard ways to represent a Unicode string in TensorFlow:

- `string` scalar: the sequence of code points is encoded using a known character encoding.
- `int32` vector: each position contains a single code point.

For example, the following three values all represent the Unicode string "语言处理" (which means "language processing" in Chinese):

- a UTF-8 encoded string scalar,
- a UTF-16-BE encoded string scalar, and
- a vector of Unicode code points.

TensorFlow provides operations to convert between these different representations.
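Below is a minimal sketch of these ideas, assuming TensorFlow 2.x with eager execution. It builds a `tf.string` vector to show that string lengths are not part of the shape, creates the three representations of "语言处理", and converts between them with `tf.strings.unicode_decode`, `tf.strings.unicode_encode`, and `tf.strings.unicode_transcode`. The variable names are illustrative only.

```python
import tensorflow as tf

# Byte strings are atomic elements of a tf.string tensor: this is a vector of
# 2 strings of different lengths, and its shape does not record those lengths.
greetings = tf.constant([u"You're", u"welcome!"])
print(greetings.shape)  # (2,)

text = u"语言处理"

# 1. UTF-8 encoded string scalar (a Python str is stored as UTF-8 bytes).
text_utf8 = tf.constant(text)

# 2. UTF-16-BE encoded string scalar.
text_utf16be = tf.constant(text.encode("UTF-16-BE"))

# 3. int32 vector with one Unicode code point per position.
text_chars = tf.constant([ord(ch) for ch in text], dtype=tf.int32)

# Decode: encoded string scalar -> int32 code points.
decoded = tf.strings.unicode_decode(text_utf8, input_encoding="UTF-8")

# Encode: int32 code points -> encoded string scalar.
encoded = tf.strings.unicode_encode(text_chars, output_encoding="UTF-8")

# Transcode: one string encoding -> another, without going through code points.
transcoded = tf.strings.unicode_transcode(
    text_utf8, input_encoding="UTF-8", output_encoding="UTF-16-BE")

print(decoded.numpy())                        # [35821 35328 22788 29702]
print(encoded.numpy().decode("utf-8"))        # 语言处理
print(transcoded.numpy() == text_utf16be.numpy())  # True
```

Decoding a scalar string yields a dense 1-D code-point vector; for a batch of strings of different lengths, `unicode_decode` returns a `tf.RaggedTensor` instead, since each string can have a different number of code points.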
